Deep Learning Explained: A Step-by-Step Guide

Introduction: Deep Learning’s Relevance and Transformative Potential

In an era where technology evolves at a breathtaking pace, deep learning stands as a beacon of progress, a tool that is reshaping our world. It is an advanced form of machine learning, itself a subset of artificial intelligence (AI), that uses layered neural networks loosely inspired by the human brain to process data and find patterns for decision-making. Its transformative potential is immense, extending into sectors from healthcare to finance and altering the way we live, work, and interact.

This guide is crafted for those at the start of their deep learning journey. Whether you are a curious student, an aspiring data scientist, or just someone intrigued by the wonders of AI, this guide aims to illuminate the path, transforming complex concepts into digestible insights.

Exploring the Core of Deep Learning

What is Deep Learning?

Deep learning is a branch of AI that processes data through a layered structure of algorithms known as neural networks, loosely modeled on how neurons in the human brain connect. It is a step beyond traditional machine learning. Machine learning uses algorithms to parse data, learn from it, and make decisions. Deep learning stacks many layers into an artificial neural network that learns its own representations of the data, from simple patterns in early layers to more abstract ones in deeper layers, and uses them to make predictions and decisions.

The concept of neural networks isn’t new; it dates back to the 1950s. However, it’s in the last decade that deep learning has surged in prominence, fueled by the availability of large data sets and powerful computing resources. Today, it’s at the heart of many cutting-edge technologies and applications, from voice recognition systems like Siri and Alexa to powerful image recognition and autonomous vehicles.

Deep Learning vs. Traditional Techniques

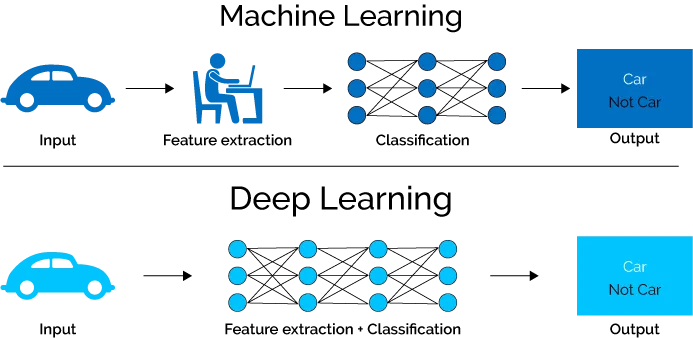

Deep learning’s strength lies in its ability to process large volumes of unstructured data, such as images, audio, and text, and to learn useful features from that data directly. Traditional machine learning techniques, while powerful, usually work best with structured data or hand-engineered features. They can struggle with tasks like image and speech recognition, where deep learning excels.

Figure 1: Difference between Deep Learning and Machine Learning. Source: https://towardsdatascience.com/why-deep-learning-is-needed-over-traditional-machine-learning-1b6a99177063

While deep learning offers unparalleled strengths in handling complex, unstructured data sets, it’s not without its challenges. Its need for large amounts of training data, significant computational power, and the often opaque nature of its decision-making process (sometimes referred to as the “black box” problem) are points of consideration.

Exploring Deep Learning Applications

Transforming Industries with Deep Learning

Deep learning algorithms are used in healthcare for personalized treatment recommendations and diagnostic tools, like identifying cancerous tissues in medical imagery. In finance, it aids in fraud detection and algorithmic trading, analyzing large volumes of transaction data to identify patterns.

It’s also embedded in everyday technologies: the recommendation systems on Netflix and YouTube, the facial recognition on our smartphones, and the virtual assistants in our homes all rely heavily on deep learning algorithms.

Deep Learning in Research and Innovation

One notable example is AlphaFold, a program developed by DeepMind. It has made groundbreaking advances in predicting the 3D structure of proteins from their amino acid sequences, a key to understanding biological processes and developing new medicines.

The potential for deep learning in solving complex global challenges, from climate modeling to advancing renewable energy technologies, is vast. Ongoing projects in AI labs around the world hint at a future where deep learning plays a central role in scientific breakthroughs.

Tit-Bit: Did you know? Deep learning models, especially Convolutional Neural Networks (CNNs), have matched or exceeded reported human-level accuracy on some image classification benchmarks, such as ImageNet!

Deep Learning Techniques and Frameworks

Overview of Deep Learning Techniques

Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), etc.

Deep learning’s prowess comes from its diverse range of techniques, each suited for different types of problems. Let’s dive into two fundamental ones:

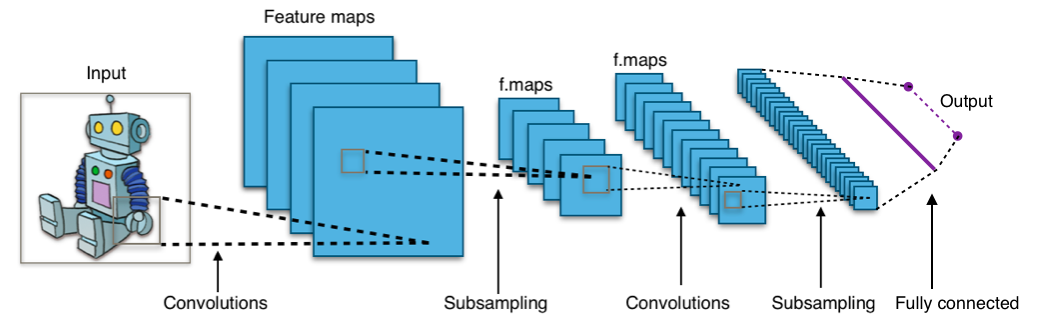

1. Convolutional Neural Networks (CNNs):

Predominantly used in image processing, CNNs consist of layers that systematically apply filters to input data, extracting features like edges in the initial layers and complex features like shapes and objects in deeper layers.

Mathematical Concept:

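A convolutional layer transforms an input volume into an output volume of neuron activations. Consider a single-channel input I, a k × k filter F, and a bias b, with a stride of 1 and no padding. The activation at position (x, y) of the output O is:

$$O_{x,y} = \sum_{i=0}^{k-1} \sum_{j=0}^{k-1} I_{x+i,\,y+j}\, F_{i,j} + b$$

In words, the filter is laid over the patch of the input that starts at (x, y). Each pair of overlapping values is multiplied, the products are summed, and the bias b is added. Sliding the filter across every position produces the full output O.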

Figure 2: Diagram of a typical Convolutional Neural Network (CNN). Source: https://en.wikipedia.org/wiki/Convolutional_neural_network#/media/File:Typical_cnn.png
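The convolution formula above can be checked with a small NumPy sketch. It assumes a single-channel input, one square filter, stride 1, and no padding. Like most deep learning libraries, it computes cross-correlation, meaning the filter is not flipped. The function name `conv2d_single`, the toy image, and the hand-written edge filter are illustrative assumptions, not part of any library.

```python
import numpy as np

# Illustrative sketch: single channel, stride 1, no padding (assumptions).
def conv2d_single(I, F, b=0.0):
    """Valid 2D cross-correlation of input I with filter F, plus bias b.

    Implements O[x, y] = sum_{i, j} I[x + i, y + j] * F[i, j] + b.
    """
    k_h, k_w = F.shape
    out_h = I.shape[0] - k_h + 1
    out_w = I.shape[1] - k_w + 1
    O = np.zeros((out_h, out_w))
    for x in range(out_h):
        for y in range(out_w):
            # Multiply the filter with the input patch it covers, then sum
            O[x, y] = np.sum(I[x:x + k_h, y:y + k_w] * F) + b
    return O

# Toy 5x5 "image" (hypothetical data): dark columns (0) on the left, bright (1) on the right
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)

# Hand-written vertical edge detector: responds to left-to-right intensity changes
edge_filter = np.array([[-1, 0, 1],
                        [-1, 0, 1],
                        [-1, 0, 1]], dtype=float)

feature_map = conv2d_single(image, edge_filter)
print(feature_map)  # every row is [3. 3. 0.]
```

The filter responds strongly (3) wherever its 3 × 3 window straddles the dark-to-bright boundary. It gives 0 over the uniformly bright region. A CNN learns filter values like these from data instead of having them written by hand.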

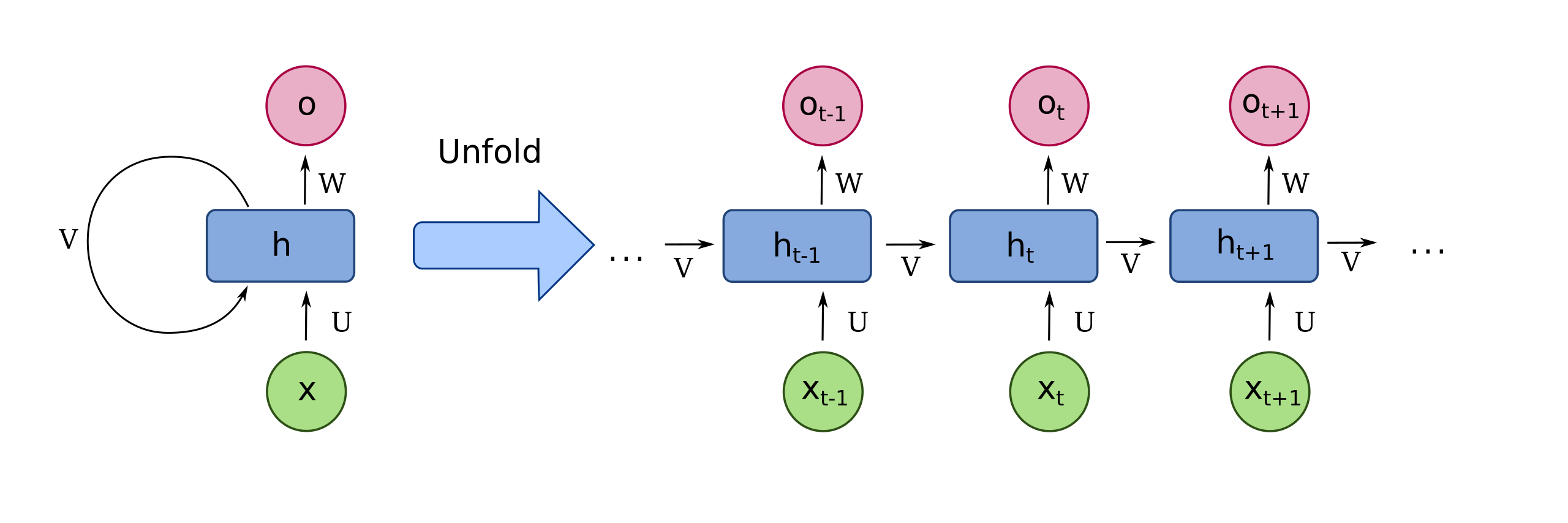

2. Recurrent Neural Networks (RNNs):

Ideal for sequential data like speech or text, RNNs process inputs one step at a time. They maintain a hidden state, an internal memory that summarizes previous inputs, which informs the current output. This makes them well suited to tasks like language translation.

Figure 3: Diagram of a typical Recurrent Neural Network (RNN). Source: https://upload.wikimedia.org/wikipedia/commons/thumb/b/b5/Recurrent_neural_network_unfold.svg/2560px-Recurrent_neural_network_unfold.svg.png
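To make the idea of an internal memory concrete, here is a minimal NumPy sketch of a simple (Elman-style) RNN's forward pass. It uses the recurrence h_t = tanh(W_x x_t + W_h h_{t-1} + b). This is essentially what a layer like Keras's `SimpleRNN` computes with its default `tanh` activation. The weights here are random and untrained, and the sizes and names are illustrative assumptions.

```python
import numpy as np

# Illustrative sketch: random, untrained weights; sizes are assumptions.
def rnn_forward(xs, W_x, W_h, b):
    """Run a simple RNN over a sequence.

    xs: array of shape (T, input_dim), one row per time step.
    Returns every hidden state, shape (T, hidden_dim).
    """
    h = np.zeros(W_h.shape[0])        # the memory starts empty
    states = []
    for x_t in xs:
        # New state combines the current input with the previous state (the "memory")
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.array(states)

rng = np.random.default_rng(0)
input_dim, hidden_dim, T = 1, 4, 6
W_x = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_h = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)

sequence = np.arange(T, dtype=float).reshape(T, input_dim)  # toy sequence 0, 1, ..., 5
states = rnn_forward(sequence, W_x, W_h, b)
print(states.shape)  # (6, 4): one hidden state per time step
```

Because each state is fed into the next step, changing an early input also changes later hidden states. That carried-over information is what lets an RNN use context from earlier in a sequence.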

Python Example: Here’s a snippet to create a simple RNN in Python using TensorFlow:

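```python
import tensorflow as tf

# Two stacked SimpleRNN layers and a Dense output.
# Input: sequences of any length (None) with 1 feature per time step.
model = tf.keras.models.Sequential([
    tf.keras.Input(shape=(None, 1)),
    # return_sequences=True passes the hidden state from every time step to the next layer
    tf.keras.layers.SimpleRNN(50, return_sequences=True),
    # The second RNN layer returns only its final hidden state
    tf.keras.layers.SimpleRNN(50),
    # A single output value, e.g. the next value in a time series
    tf.keras.layers.Dense(1)
])

model.compile(optimizer='adam', loss='mean_squared_error')
```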

Selection Criteria for Different Problems

Choosing between these techniques depends on the nature of your data and the problem at hand. For image-related tasks, CNNs are generally preferred, while RNNs are more suited for time-series or textual data.

Navigating Deep Learning Frameworks

Introduction to TensorFlow, Keras, and Others

Frameworks like TensorFlow and Keras have democratized deep learning, providing accessible tools to build complex neural networks. TensorFlow, developed by Google, is renowned for its flexibility and scalability. Keras, a high-level API that ships with TensorFlow as `tf.keras`, offers a more user-friendly interface for rapid prototyping.

Selecting the Right Framework for Your Project

Your choice of framework hinges on your project’s requirements:

- TensorFlow: Best for large-scale, complex projects.

- Keras: Ideal for beginners and smaller projects due to its simplicity.

- PyTorch: Another popular choice, known for its dynamic computational graph, making it a favorite among researchers.

Tip: Start with Keras if you’re new to deep learning; its simplicity will help you grasp the basics without being overwhelmed by complexity.

Getting Started with Deep Learning

Preparing for Your Deep Learning Project

Before diving into deep learning, certain prerequisites are essential:

- Basic Understanding of Programming: Knowledge of Python is highly beneficial, as it’s the most common language used in deep learning.

- Mathematical Foundations: Familiarity with linear algebra, calculus, and probability is crucial. These mathematical concepts are the backbone of how neural networks learn and make predictions.

- Computational Resources: Access to a computer with a capable GPU (Graphics Processing Unit) is recommended for training models efficiently.

Setting Up the Development Environment

Setting up involves:

- Installing Python: Ensure you have Python installed on your machine.

- Setting Up Libraries: Install a framework such as TensorFlow (which includes Keras) or PyTorch. For TensorFlow and Keras, you can use:

```bash
pip install tensorflow
```

- Development Environment: Use a notebook environment like Jupyter Notebook or Google Colab for writing and testing your code.

Implementing Your First Neural Network

Step-by-Step Guide to Building a Simple Neural Network

Let’s build a basic neural network for classifying handwritten digits using the MNIST dataset:

1. Import Libraries:

```python
import tensorflow as tf
from tensorflow.keras.datasets import mnist
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten
```

2. Load and Prepare Data:

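```python
# Load 28x28 grayscale digit images and their integer labels (0-9)
(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

# Scale pixel values from 0-255 down to 0-1
train_images = train_images / 255.0
test_images = test_images / 255.0
```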

3. Build the Neural Network:

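```python
model = Sequential([
    Flatten(input_shape=(28, 28)),   # 28x28 image -> 784-element vector
    Dense(128, activation='relu'),   # hidden layer with 128 neurons
    Dense(10, activation='softmax')  # one probability per digit class
])
```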

4. Compile the Model:

```python
# sparse_categorical_crossentropy expects integer labels, as returned by mnist.load_data()
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])
```

5. Train the Model:

```python
# Five full passes (epochs) over the 60,000 training images
model.fit(train_images, train_labels, epochs=5)
```

6. Evaluate the Model:

```python
# Measure loss and accuracy on the 10,000 held-out test images
test_loss, test_acc = model.evaluate(test_images, test_labels, verbose=2)
print('\nTest accuracy:', test_acc)
```

Explanation of Code and Model Architecture

- Loading Data: The MNIST dataset is used. It contains 60,000 grayscale 28 × 28 images of handwritten digits for training and 10,000 for testing.

- Preprocessing: Pixel values, originally integers from 0 to 255, are normalized to the range 0 to 1 by dividing by 255.

- Model Architecture: The model consists of a Flatten layer to convert 2D images to a 1D array, followed by two Dense layers. The first Dense layer has 128 nodes (or neurons) with ReLU activation, and the second is a 10-node softmax layer that returns an array of 10 probability scores summing to 1.
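The arithmetic behind this architecture is easy to check by hand. Each Dense layer has one weight per input-output pair plus one bias per output. The first layer therefore has 784 × 128 + 128 = 100,480 parameters, and the output layer has 128 × 10 + 10 = 1,290. That makes 101,770 in total, the figure `model.summary()` should report for this model. The NumPy sketch below reproduces that count and runs one untrained forward pass, with random weights and a fake image, to show ReLU and softmax at work. The helper names are illustrative and are not Keras functions.

```python
import numpy as np

# Illustrative sketch: random weights and a fake image stand in for trained values.
def dense_params(n_in, n_out):
    # One weight per (input, output) pair plus one bias per output unit
    return n_in * n_out + n_out

def relu(z):
    return np.maximum(0.0, z)

def softmax(z):
    # Subtracting the max improves numerical stability without changing the result
    e = np.exp(z - np.max(z, axis=-1, keepdims=True))
    return e / np.sum(e, axis=-1, keepdims=True)

total = dense_params(28 * 28, 128) + dense_params(128, 10)
print(total)  # 101770

rng = np.random.default_rng(0)
image = rng.random((28, 28))              # fake image, values already in [0, 1)
x = image.reshape(-1)                     # Flatten: (28, 28) -> (784,)
W1, b1 = rng.normal(scale=0.05, size=(784, 128)), np.zeros(128)
W2, b2 = rng.normal(scale=0.05, size=(128, 10)), np.zeros(10)

hidden = relu(x @ W1 + b1)                # like Dense(128, activation='relu')
probs = softmax(hidden @ W2 + b2)         # like Dense(10, activation='softmax')
print(probs.shape, probs.sum())           # 10 probabilities summing to 1 (up to rounding)
print(int(np.argmax(probs)))              # index of the "predicted" digit (meaningless untrained)
```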

Tip: Use Google Colab for free access to GPUs for training more complex models.

The Science Behind Deep Learning

Understanding the Mathematics

At the heart of deep learning lies a solid foundation of mathematics, particularly linear algebra and calculus. Understanding these is crucial:

1. Linear Algebra:

It’s used in representing data and operations in neural networks. For instance, data points can be represented as vectors and the weights of a neural network as matrices. Operations like matrix multiplication are fundamental in how layers of neurons interact.
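As an illustration, applying a layer to a whole batch of inputs is a single matrix multiplication plus a broadcast bias. The sketch below assumes a batch of 32 flattened 28 × 28 images and a 128-neuron layer; the sizes are borrowed from the MNIST example, and the data are random placeholders. It then checks one entry against the explicit weighted sum it stands for.

```python
import numpy as np

# Illustrative sketch: random placeholder data; sizes are assumptions.
rng = np.random.default_rng(42)
batch_size, n_in, n_out = 32, 784, 128

X = rng.random((batch_size, n_in))    # 32 data points, each a 784-element vector
W = rng.normal(size=(n_in, n_out))    # weights of a 128-neuron layer as a matrix
b = rng.normal(size=n_out)            # one bias per neuron

# Matrix form: all 128 neurons for all 32 examples in one multiplication
Z = X @ W + b                         # shape (32, 128); b is broadcast across rows
print(Z.shape)                        # (32, 128)

# The same value for neuron j on example n, written as an explicit weighted sum
n, j = 0, 5
z_nj = sum(X[n, i] * W[i, j] for i in range(n_in)) + b[j]
print(np.isclose(Z[n, j], z_nj))      # True
```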

2. Calculus:

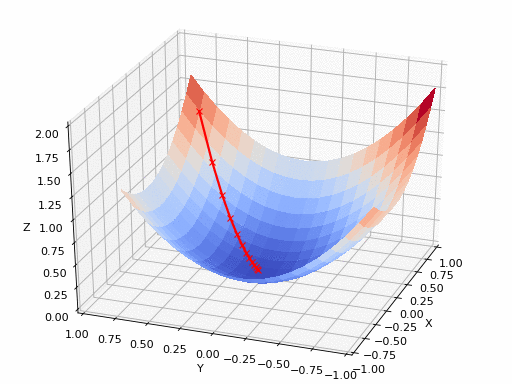

Specifically, differential calculus is used in optimizing neural networks. The process of training a network involves finding the minimum of a loss function, which is achieved using techniques like gradient descent.

Mathematical Concept – Gradient Descent:

The goal is to minimize the loss function L. If w represents the weights of the network, the update rule in gradient descent is:

$$w := w - \alpha \, \nabla_w L(w)$$

Here, α is the learning rate, and ∇_w L(w) is the gradient of the loss function with respect to the weights.

Figure 4: Gradient Descent in action. Source: https://machine-learning.paperspace.com/wiki/gradient-descent
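The update rule can be tried on a problem small enough to follow by hand. The sketch below fits a line y = w·x + b to noise-free toy data generated from w = 2 and b = 1. It uses mean squared error as the loss L and plain full-batch gradient descent. The data, the learning rate α = 0.1, and the step count are arbitrary illustrative choices.

```python
import numpy as np

# Illustrative sketch: toy data from y = 2x + 1 (no noise), so the optimum is w = 2, b = 1
x = np.linspace(-1.0, 1.0, 20)
y = 2.0 * x + 1.0

w, b = 0.0, 0.0       # initial parameters
alpha = 0.1           # learning rate

for step in range(500):
    error = (w * x + b) - y
    loss = np.mean(error ** 2)           # L(w, b): mean squared error
    grad_w = 2.0 * np.mean(error * x)    # dL/dw
    grad_b = 2.0 * np.mean(error)        # dL/db
    # Gradient descent: step against the gradient, scaled by the learning rate
    w -= alpha * grad_w
    b -= alpha * grad_b

print(round(w, 3), round(b, 3))  # approaches 2.0 and 1.0
```

A learning rate that is too large can make these updates overshoot and diverge. One that is too small makes convergence slow.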

The Role of These Concepts in Model Training and Optimization

These mathematical concepts allow the neural network to learn from data iteratively. By adjusting the weights through gradient descent, the network improves its predictions over time.

Training Deep Learning Models

In-depth Look at the Training Process, Including Loss Functions and Optimizers

Training a deep learning model involves several key steps:

- Forward Propagation: The input data is passed through the network, layer by layer, until it reaches the output layer.

- Calculating Loss: The difference between the network’s prediction and the actual target values is calculated using a loss function like mean squared error for regression tasks or cross-entropy for classification.

- Backpropagation: The loss is propagated back through the network, using the chain rule to calculate the gradient of the loss function with respect to each weight.

- Updating Weights: The weights are updated by an optimizer such as gradient descent or a variant like Adam.
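These four steps can be seen explicitly in a from-scratch NumPy sketch. It is a simplified stand-in for what a call like `model.fit` automates, not how TensorFlow implements it. The sketch trains a tiny two-layer network on the XOR problem with binary cross-entropy loss and plain gradient descent. The network size, learning rate, and epoch count are illustrative assumptions.

```python
import numpy as np

# Illustrative sketch: tiny network and hyperparameters are assumptions.
def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_xor(epochs=2000, lr=0.5, hidden=8, seed=0):
    rng = np.random.default_rng(seed)
    # XOR is not linearly separable, so a hidden layer is needed
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)

    W1 = rng.normal(size=(2, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(size=(hidden, 1))
    b2 = np.zeros(1)
    losses = []

    for _ in range(epochs):
        # 1. Forward propagation
        h = np.tanh(X @ W1 + b1)           # hidden layer activations
        p = sigmoid(h @ W2 + b2)           # predicted probability of class 1

        # 2. Calculating loss: binary cross-entropy (clipping avoids log(0))
        p_safe = np.clip(p, 1e-12, 1 - 1e-12)
        losses.append(-np.mean(y * np.log(p_safe) + (1 - y) * np.log(1 - p_safe)))

        # 3. Backpropagation: chain rule from the output back toward the input
        dz2 = (p - y) / len(X)             # dLoss/d(pre-sigmoid output)
        dW2, db2 = h.T @ dz2, dz2.sum(axis=0)
        dz1 = (dz2 @ W2.T) * (1 - h ** 2)  # tanh'(z) = 1 - tanh(z)^2
        dW1, db1 = X.T @ dz1, dz1.sum(axis=0)

        # 4. Updating weights with gradient descent
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2

    return losses, p

losses, predictions = train_xor()
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print(np.round(predictions.ravel(), 2))
```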

Python Code Example:

Here’s a snippet showing how a model is trained using TensorFlow:

```python
model.fit(train_images, train_labels, epochs=5)
```

This code trains the model on the training data (`train_images` and `train_labels`) for five epochs, automatically handling forward propagation, loss calculation, backpropagation, and weight updates.

Handling Challenges like Overfitting and Underfitting

- Overfitting: Occurs when a model learns the training data too well, including the noise and outliers, leading to poor performance on new data. Techniques like dropout, regularization, and using more data can help mitigate overfitting.

- Underfitting: Happens when a model cannot capture the underlying trend of the data. This can be addressed by increasing model complexity, training for more epochs, or reducing regularization.
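A quick way to see both failure modes, and why a validation set matters, is to fit models of increasing capacity to the same small, noisy dataset. You then compare training and validation error. The sketch below deliberately simplifies by using polynomial regression as a stand-in for a neural network. The sine-shaped data, noise level, and chosen degrees are illustrative assumptions.

```python
import numpy as np
from numpy.polynomial import Polynomial

# Illustrative sketch: polynomial degree stands in for network capacity (assumption).
rng = np.random.default_rng(1)

def make_data(n):
    # Noisy samples of an underlying sine curve (hypothetical toy data)
    x = rng.uniform(-1, 1, n)
    return x, np.sin(3 * x) + rng.normal(scale=0.2, size=n)

x_train, y_train = make_data(15)
x_val, y_val = make_data(100)

def mse(model, x, y):
    return float(np.mean((model(x) - y) ** 2))

results = {}
for degree in (1, 4, 12):
    # Least-squares polynomial fit on the training set only
    model = Polynomial.fit(x_train, y_train, deg=degree)
    results[degree] = (mse(model, x_train, y_train), mse(model, x_val, y_val))
    print(f"degree {degree:2d}: train MSE {results[degree][0]:.3f}, "
          f"validation MSE {results[degree][1]:.3f}")
```

With settings like these you should typically see degree 1 with high error on both sets, which is underfitting. Degree 12 typically has the lowest training error but a clearly worse validation error, which is overfitting. Exact numbers depend on the random seed.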

Tip: Regularly test your model’s performance on a validation set to monitor for overfitting.

Pros and Cons of Deep Learning

Advantages of Deep Learning

Deep learning has revolutionized many aspects of technology and research, offering several significant advantages:

- Handling Complex Data: Deep learning models excel at processing and making sense of complex, unstructured data like images, audio, and text.

- Automatic Feature Extraction: Unlike traditional machine learning models, deep learning models learn the features needed for classification or prediction directly from raw data, greatly reducing the need for manual feature engineering.

- Continuous Improvement: Performance tends to keep improving as more training data becomes available, often more so than with traditional methods. However, a model benefits from new data only after it is retrained or fine-tuned on it.

- Versatility: These models can be adapted and applied to a wide range of industries and problems, from healthcare diagnostics to autonomous vehicles.

Challenges and Limitations

Addressing Common Concerns: Data Requirements, Computational Costs, Interpretability

Despite its strengths, deep learning also faces several challenges:

- Large Data Requirements: Deep learning models often require vast amounts of data to train effectively, which can be a significant limitation in data-scarce domains.

- High Computational Costs: The training process is resource-intensive, necessitating powerful hardware, often with specialized GPUs, which can be costly.

- Lack of Interpretability: Deep learning models, particularly complex ones, can act as “black boxes,” making it difficult to understand how they reach a particular decision or prediction. This lack of transparency can be a critical issue in fields like healthcare or law.

Tit-Bit: The concept of “Transfer Learning” in deep learning allows models pre-trained on large datasets to be fine-tuned for specific tasks, significantly reducing data requirements and computational costs.

The Future of Deep Learning

Trends and Future Directions

The future of deep learning is bright and dynamic, with several key trends shaping its evolution:

- Improved Efficiency: Ongoing research aims to make deep learning models more efficient, reducing their computational and data requirements.

- Enhanced Interpretability: There’s a growing focus on making models more interpretable and transparent, a crucial aspect of their ethical and responsible use.

- Integration with Other AI Techniques: Deep learning is increasingly being combined with other AI methods, like reinforcement learning, for more sophisticated applications.

- Cross-Disciplinary Applications: Its application is expanding into new fields like climate science, linguistics, and material science, promising novel insights and breakthroughs.

Predictions on the Impact and Evolution of Deep Learning

As deep learning continues to evolve, it’s likely to become more ingrained in both our daily lives and cutting-edge research. Its ability to process and learn from vast amounts of data will be crucial in addressing some of the most pressing challenges of our time, from climate change to healthcare.

Conclusion and Your Path Forward in Deep Learning

Deep learning, an integral part of the broader field of artificial intelligence, stands out for its ability to learn directly from data using layered networks loosely inspired by the human brain. Its foundations lie in linear algebra and calculus, and it is applied in diverse fields like healthcare, finance, and autonomous driving. Deep learning continues to push the boundaries of what’s possible with technology.

The journey into deep learning is an ongoing process of learning and experimentation. As this field evolves rapidly, staying updated with the latest developments, research papers, and technologies is crucial. Engage with online communities, participate in hackathons, and contribute to open-source projects to gain practical experience and insights.

Key Takeaways Table

| Aspect | Key Points |
| --- | --- |
| What is Deep Learning? | A branch of AI loosely inspired by the human brain; excels at processing complex data. |
| Techniques and Frameworks | CNNs for images, RNNs for sequential data; TensorFlow, Keras, PyTorch. |
| Getting Started | Learn Python, linear algebra, and calculus; start with simple projects. |
| Mathematics and Training | Essential for model optimization; balance overfitting and underfitting. |
| Pros and Cons | Powerful in data processing; challenges in data needs and interpretability. |
| The Future | Trends towards efficiency, interpretability, and cross-disciplinary applications. |

Embrace deep learning as both a fascinating field of study and a powerful tool for innovation. Whether you’re a student, a developer, or simply an AI enthusiast, the world of deep learning offers endless opportunities for growth, discovery, and contribution to the technological advancements of our era.
