Understanding the Importance of Reinforcement Learning in Today’s Technological Landscape

Introduction to Reinforcement Learning

What is Reinforcement Learning?

Imagine teaching a child to ride a bike. You can’t program every move they make. Instead, you give feedback, praising good balance and steering and correcting them before they fall. That’s the essence of Reinforcement Learning (RL): a type of machine learning in which an agent learns to make decisions by performing actions and receiving feedback.

In practice, the agent is a piece of software (or a machine it controls) that interacts with an environment. It receives rewards for favorable actions and penalties for undesirable ones, and it gradually improves its strategy to maximize the total reward it collects.

Why Reinforcement Learning is Pivotal in Modern Technology

Reinforcement Learning stands out in the modern technological landscape for several reasons:

- Adaptability: RL agents can adapt to new, unforeseen conditions. This flexibility is crucial in today’s rapidly changing technological world.

- Problem-Solving: RL excels where success can be scored with a reward signal, even when that signal arrives only after a long sequence of decisions, making it well suited to complex, sequential problem-solving scenarios.

- Innovation: From gaming to autonomous vehicles, RL drives innovation by enabling systems to learn and improve from their own experiences.

Understanding the Core of Reinforcement Learning

Key Concepts and Terminology

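Four terms cover most of what follows: Agent, Environment, Actions, and Rewards. A fifth, the state (the agent’s current situation as reported by the environment), appears in the learning cycle described after this list.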

- Agent: The learner or decision-maker.

- Environment: The setting or situation in which the agent operates.

- Actions: What the agent can do.

- Rewards: Feedback from the environment. Positive for desirable actions and negative for undesirable ones.

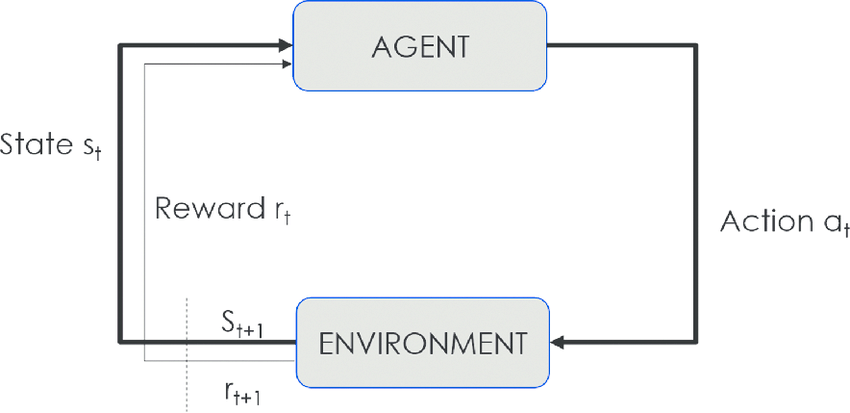

The Reinforcement Learning Cycle: A Visual Representation

Figure 1: Reinforcement learning cycle: the agent performs an action, receives a reward, and ends up in a new state. Source: https://www.researchgate.net/figure/Reinforcement-learning-cycle-agent-performs-action-receives-reward-and-ends-up-on-a-new_fig3_308838020

Figure 1 is a loop: the agent chooses an action, the environment responds with a new state and a reward, and the agent adjusts its strategy. This ongoing cycle is the heartbeat of RL.
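The loop in Figure 1 can be sketched in a few lines of Python. This is a minimal illustration rather than a standard API: the `Corridor` environment, its reward values, and the `run_episode` helper are hypothetical names invented for this example.

```python
import random


class Corridor:
    """Hypothetical toy environment: walk from cell 0 to the goal cell."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        # action is -1 (left) or +1 (right); the walls clamp the position
        self.position = min(max(self.position + action, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else -0.1  # small penalty for every extra step
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """One pass through the loop in Figure 1; returns the total reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # agent chooses an action
        state, reward, done = env.step(action)  # environment responds
        total_reward += reward                  # agent observes the reward
        if done:
            break
    return total_reward


random.seed(0)
random_return = run_episode(Corridor(), lambda state: random.choice([-1, 1]))
always_right_return = run_episode(Corridor(), lambda state: 1)
```

In this toy setup the policy that always moves right collects the highest possible return, while the random policy can only match it or do worse. The job of an RL algorithm is to discover the better policy from the rewards alone.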

Fundamental Math Equations in Reinforcement Learning

Understanding Reward Functions

A reward function quantifies how desirable an outcome is. It assigns a numerical value (the reward) to each state, or more generally to each combination of state, action, and next state, and this number guides the agent’s actions.

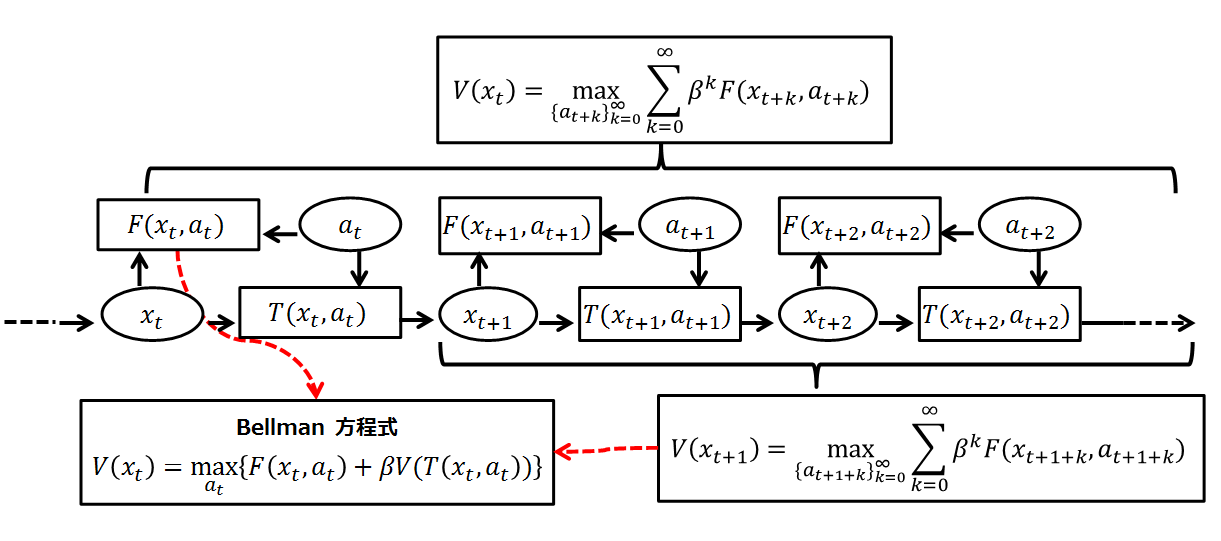
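As a rough sketch of what such a function can look like in code, consider a small grid world. The grid layout, the `GOAL` and `PITS` cells, and the reward magnitudes below are all assumptions chosen for illustration.

```python
GOAL = (3, 3)            # hypothetical goal cell
PITS = {(1, 2), (2, 1)}  # hypothetical hazard cells


def reward(state):
    """Return the reward for arriving in `state`, an (x, y) grid cell."""
    if state == GOAL:
        return 10.0   # reaching the goal is strongly rewarded
    if state in PITS:
        return -10.0  # falling into a pit is strongly penalized
    return -1.0       # a small step cost encourages short paths
```

The step cost is a design choice: without it, an agent would have no reason to prefer a short route to the goal over a long one.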

The Bellman Equation: A Mathematical Perspective

The Bellman Equation is central to RL. It gives a recursive relationship for the optimal value function, from which the optimal policy follows. In simple terms, it expresses the best expected cumulative (discounted) reward obtainable from the current state in terms of the immediate reward and the value of the next state. Here’s a basic representation:

$$V(s) = \max_a \sum_{s'} P(s' \mid s, a) \left[ R(s, a, s') + \gamma V(s') \right]$$

Where:

- $V(s)$ is the value of state $s$.

- $\max_a$ represents choosing the action $a$ that maximizes the value.

- $\sum_{s'}$ is the sum over all possible next states $s'$.

- $P(s' \mid s, a)$ is the probability of reaching state $s'$ from $s$ by taking action $a$.

- $R(s, a, s')$ is the reward received after transitioning from $s$ to $s'$ by taking action $a$.

- $\gamma$ is the discount factor, which weighs the importance of future rewards.

Figure 2: Bellman flow chart. Source: https://en.wikipedia.org/wiki/Bellman_equation#/media/File:Bellman_flow_chart.png
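One way to see the Bellman Equation at work is value iteration, which applies the right-hand side repeatedly until the values stop changing. The sketch below uses a made-up three-state problem; the transition table `P`, the action names, and the rewards are assumptions for illustration only.

```python
GAMMA = 0.9  # discount factor

# P[s][a] is a list of (probability, next_state, reward) outcomes.
# State 2 is terminal: it has no actions and its value stays 0.
P = {
    0: {"stay": [(1.0, 0, 0.0)],
        "go":   [(0.8, 1, 0.0), (0.2, 0, 0.0)]},
    1: {"stay": [(1.0, 1, 0.0)],
        "go":   [(0.8, 2, 1.0), (0.2, 1, 0.0)]},
    2: {},
}


def value_iteration(P, gamma, tol=1e-10):
    """Apply the Bellman update to every state until the values converge."""
    V = {s: 0.0 for s in P}
    while True:
        delta = 0.0
        for s, actions in P.items():
            if not actions:  # terminal state
                continue
            # max over actions of the expected (reward + discounted next value)
            best = max(
                sum(p * (r + gamma * V[s2]) for p, s2, r in outcomes)
                for outcomes in actions.values()
            )
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            return V


V = value_iteration(P, GAMMA)
```

Here `V[1]` converges to about 0.976 and `V[0]` to about 0.857. State 0 is worth less because the reward is one step further away and is therefore discounted once more.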

Tidbit

Did you know? The concept of Reinforcement Learning was inspired by behavioral psychology and the way animals learn from their environment.

Identifying Problems Suited for Reinforcement Learning

Specific Challenges and Scenarios

Dynamic Decision-Making and Optimization Problems

Reinforcement Learning shines in dynamic decision-making scenarios where the environment constantly changes and decisions need to be made sequentially. Imagine a chess game where each move depends on the evolving state of the board. RL algorithms can navigate such complexities by continuously learning and adapting their strategies.

Adaptive Systems in Unpredictable Environments

RL is also pivotal in managing systems that must adapt to unpredictable conditions. For instance, consider a self-adjusting thermostat that learns your preferences and adjusts the temperature based on various factors like time of day or outside weather.
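The thermostat idea can be sketched as a very small RL problem in which the agent tries different setpoints and keeps a running estimate of how comfortable each one is. Everything here is a simplifying assumption: the occupant’s feedback is simulated as the negative distance from a hidden preferred temperature, there is no noise, and time of day and weather are ignored.

```python
import random


def learn_setpoint(preferred=21, setpoints=range(18, 25),
                   steps=200, epsilon=0.1, seed=0):
    """Epsilon-greedy search for the setpoint with the best feedback."""
    rng = random.Random(seed)
    q = {t: 0.0 for t in setpoints}  # estimated comfort per setpoint
    n = {t: 0 for t in setpoints}    # how often each setpoint was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            t = rng.choice(list(q))  # explore: try a random setpoint
        else:
            t = max(q, key=q.get)    # exploit: use the best estimate so far
        reward = -abs(t - preferred)     # simulated occupant feedback
        n[t] += 1
        q[t] += (reward - q[t]) / n[t]   # incremental average of rewards
    return max(q, key=q.get)


best = learn_setpoint()
```

With the default arguments this returns 21, the hidden preference. A real thermostat would face noisy feedback and changing conditions, so it would need far more data and a richer notion of state.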

Real-World Applications and Case Studies

Optimizing Supply Chains through Reinforcement Learning

Case Study 1: A leading e-commerce company used RL to optimize its supply chain. By simulating different supply chain scenarios, the RL model identified the most efficient ways to manage inventory, resulting in reduced costs and improved delivery times.

Personalized Medicine and Drug Development

Case Study 2: In the field of drug discovery, RL algorithms have been used to predict the effectiveness of new compounds, significantly speeding up the development process and paving the way for personalized medicine tailored to individual genetic profiles.

The Role of Reinforcement Learning in Self-Driving Cars

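Self-driving cars are one of the most frequently cited application areas for RL. Research systems use RL, usually trained in simulation, to learn real-time decisions such as lane changes and merging in traffic, with the aim of improving safety and efficiency on the roads. Production vehicles typically combine such learned components with other perception and planning methods.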

Deep Dive with Coding Reinforcement Learning

A Basic Reinforcement Learning Code Example

Setting Up the Coding Environment: To get started with coding in RL, you’ll need Python and an environment library such as Gym (or Gymnasium, its maintained successor), which supplies ready-made environments. Deep learning frameworks such as TensorFlow or PyTorch become useful once the agent’s policy is a neural network, but this example does not need them.

Step-by-Step Code Implementation and Explanation: Here, we’ll write a simple agent-environment loop in Python using Gym. The agent in this first example picks actions at random, so it does not learn yet; the goal is to show how an agent steps through a virtual environment.

Python

    # Assumes Gymnasium, the maintained successor to OpenAI Gym
    # (gym >= 0.26 exposes the same reset/step API).
    import gymnasium as gym

    # Step 1: Environment Setup
    env = gym.make("MountainCar-v0")  # pass render_mode="human" to watch the car
    total_episodes = 1000  # total number of episodes to run

    # Step 2: The Interaction Loop
    for episode in range(total_episodes):
        state, info = env.reset()  # reset the environment at the start of each episode
        done = False
        while not done:
            action = env.action_space.sample()  # agent takes a random action
            next_state, reward, terminated, truncated, info = env.step(action)
            done = terminated or truncated  # goal reached or time limit hit
            state = next_state  # update the state

    # Step 3: Closing the Environment
    env.close()

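This code provides a foundation for understanding how an agent interacts with an environment and observes the outcomes. Because the actions are random, no learning takes place yet; a learning algorithm would use the rewards to choose better actions over time.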

In this code:

- We set up the ‘MountainCar’ environment from Gym.

- The agent takes actions randomly in each episode.

- The environment provides the next state and reward after each action.

- The loop continues until the episode ends (when ‘done’ is ‘True’).
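To show what a learning step could look like, here is a minimal sketch of tabular Q-learning. To keep it self-contained it does not use Gym: the five-cell corridor, the `step` function standing in for `env.step()`, and the hyperparameters `ALPHA` and `GAMMA` are assumptions made for this example. Actions are still chosen at random, but the agent now records what it learns in a table of action values.

```python
import random

N_CELLS = 5          # hypothetical corridor: cells 0..4, goal at cell 4
ACTIONS = (-1, +1)   # move left or move right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor


def step(state, action):
    """Toy stand-in for env.step(): returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_CELLS - 1)
    done = next_state == N_CELLS - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_CELLS) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = rng.choice(ACTIONS)  # behaviour is still random
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update: move the estimate toward
            # reward + discounted value of the best next action
            q[(state, action)] += ALPHA * (reward + GAMMA * best_next - q[(state, action)])
            state = next_state
    return q


q_table = train()
# Greedy policy: in each non-goal cell, pick the action with the highest value
greedy_policy = {s: max(ACTIONS, key=lambda a: q_table[(s, a)])
                 for s in range(N_CELLS - 1)}
```

After training, the greedy policy moves right in every cell. Q-learning is off-policy, which is why it can learn a good policy even though the behaviour during training stays random. Applying the same idea to MountainCar would additionally require handling its continuous state.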

Understanding the Code Through a Diagrammatic Representation

Figure 3: A blue car in a mountain car environment. Source: AI Generated Image.

Visualizing this process helps in understanding. Picture a flowchart showing the agent (a car) in the MountainCar environment. Each step represents an action taken (pushing left, pushing right, or not pushing at all), leading to a new state (the car’s position and velocity). The environment then gives a reward, which in MountainCar is -1 for every step until the car reaches the flag on the right-hand hill, and the cycle repeats.
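Because the car’s position and velocity are continuous numbers, table-based methods cannot use them directly. One common workaround is to group them into bins. The sketch below assumes the documented observation bounds of MountainCar-v0 (position from -1.2 to 0.6, velocity from -0.07 to 0.07); the helper names and the choice of 20 bins are hypothetical.

```python
# Observation bounds of MountainCar-v0
POSITION_RANGE = (-1.2, 0.6)
VELOCITY_RANGE = (-0.07, 0.07)


def to_bin(value, low, high, n_bins):
    """Map a value in [low, high] to an integer bin in 0..n_bins-1."""
    ratio = (value - low) / (high - low)
    return max(0, min(int(ratio * n_bins), n_bins - 1))


def discretize(position, velocity, n_bins=20):
    """Turn a continuous (position, velocity) state into a pair of bin indices."""
    return (to_bin(position, *POSITION_RANGE, n_bins),
            to_bin(velocity, *VELOCITY_RANGE, n_bins))
```

The resulting pair of integers can serve as a key into a table of action values. More bins give finer control at the cost of a larger table that takes longer to fill.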

Challenges and Ethical Considerations in Reinforcement Learning

Data Security and Privacy Issues

In the era of big data, Reinforcement Learning (RL) systems often require access to large datasets, which can include sensitive information. This raises significant concerns about data security and privacy. For instance, an RL system trained for personalized recommendations must handle user data responsibly to prevent breaches that could lead to identity theft or other forms of cybercrime. Safeguarding this data involves implementing robust encryption methods, anonymizing datasets, and adhering to strict data governance policies.

Ethical Implications of Automated Decision-Making

As RL systems increasingly make decisions that affect human lives, ethical implications come into sharp focus. There are risks of bias in decision-making, particularly if the training data is skewed. For example, an RL-based hiring tool might develop biases against certain demographic groups if the training data reflects historical hiring biases. To mitigate these risks, it’s essential to audit training data for biases, involve diverse teams in the development of RL systems, and establish ethical guidelines that govern their deployment.

Skill Gaps and Technical Challenges

The implementation of RL involves a sophisticated blend of programming, mathematics, and domain-specific expertise. One significant hurdle is the scarcity of professionals with this unique combination of skills. Education and training programs focusing on RL are essential to cultivate a workforce capable of developing and managing these advanced systems. Additionally, interdisciplinary collaboration is key to overcoming technical challenges and ensuring that RL systems are robust, efficient, and effective.

Balancing Efficiency with Ethical Standards

There’s a fine line between optimizing for efficiency and adhering to ethical standards. For example, an RL system designed to optimize energy use in a building should not compromise on providing a safe and comfortable environment for its occupants. This balance requires a human-in-the-loop approach where decision-making by RL systems is continuously monitored and adjusted by human supervisors. It also involves establishing clear ethical guidelines that prioritize human welfare and rights and embedding these principles into the very design of RL algorithms.

Future Prospects and Predictions in Reinforcement Learning

Insights from Industry Experts

Leading voices in the field of artificial intelligence and machine learning predict that Reinforcement Learning (RL) will continue to be a major driver of innovation. Experts believe that RL’s ability to adapt and learn from dynamic environments makes it particularly suited for solving complex, real-world problems that are currently beyond the reach of traditional algorithms.

Improvements in Algorithmic Efficiency

A key area of focus in the future of RL is enhancing algorithmic efficiency, in particular sample efficiency. This means developing RL models that can learn effectively from fewer interactions with the environment, reducing the need for long training periods and large datasets. Such improvements would make RL not only more accessible but also more applicable in situations where data is scarce or expensive to obtain.
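One widely used way to get more learning out of each data point is experience replay: past transitions are stored and reused for several updates instead of being discarded after one. The technique is not named in this article and is shown only as an example; the `ReplayBuffer` class and its method names are hypothetical.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of past transitions that can be sampled repeatedly."""

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)  # oldest transitions drop off automatically
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Draw transitions without replacement, capped at the buffer size
        return self.rng.sample(list(self.buffer), min(batch_size, len(self.buffer)))

    def __len__(self):
        return len(self.buffer)


buffer = ReplayBuffer(capacity=3, seed=0)
for t in range(5):
    buffer.add(state=t, action=0, reward=-1.0, next_state=t + 1, done=False)
batch = buffer.sample(2)
```

With a capacity of 3, only the three most recent transitions remain after five insertions, and each sampled batch draws from those. A learning algorithm would call `sample` many times between new interactions with the environment.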

Expanding Applications Across Disciplines

The versatility of RL suggests that its future applications will extend far beyond its current use cases. Researchers are exploring the use of RL in fields like environmental conservation, where it could optimize strategies for wildlife protection or natural resource management. Similarly, in education, RL could personalize learning experiences based on individual student responses, revolutionizing how educational content is delivered and consumed.

Real-World Examples and Case Studies

- Environmental Conservation: An RL algorithm was recently used to model and optimize the movements of a fleet of drones tasked with monitoring wildlife in a large conservation area. The algorithm learned to predict the areas most likely to require surveillance, improving the efficiency of the operation while reducing costs.

- Personalized Education: An educational platform used an RL-based system to adapt its teaching strategies in real time, based on student performance and engagement. This approach led to a measurable increase in student learning outcomes and retention rates.

Conclusion and The Growing Relevance of Reinforcement Learning

Reinforcement Learning has emerged as a key player in the technological landscape, renowned for its adaptability, problem-solving capabilities, and potential for innovation. Its applications, ranging from optimizing supply chains to driving advancements in autonomous vehicles and healthcare, highlight its versatility and effectiveness.

As we stand at the forefront of AI and machine learning, the exploration and application of RL hold immense potential for addressing some of the most pressing challenges of our time. Its evolving nature not only promises solutions to current problems but also opens doors to future possibilities that we have yet to imagine.
