The Impact of GPUs in AI: Elevating Machine Learning to New Heights

Introduction to the GPU’s Role in AI and Machine Learning

Graphics Processing Units (GPUs) have become a cornerstone in the field of artificial intelligence (AI) and machine learning (ML). These powerful processors have revolutionized the way we approach complex computational tasks, enabling faster and more efficient processing of large data sets. Originally designed for rendering graphics in video games, GPUs have found a new purpose in powering the computations required for deep learning and AI.

This article aims to provide a comprehensive understanding of GPUs’ pivotal role in AI and ML advancements. We target readers between 14 and 30 years old, including beginners in AI and ML, offering detailed explanations, real-world examples, and a breakdown of complex concepts into easily digestible sections.

The Evolution of GPUs From Graphics to Deep Learning

GPUs have undergone a remarkable transformation since their inception. Initially focused on accelerating graphics rendering, they have evolved into powerful tools for parallel processing, ideal for matrix calculations and data processing central to AI and deep learning. The parallel processing capabilities of GPUs allow them to perform calculations much faster than traditional CPUs, especially when dealing with large datasets or complex neural networks.
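To see why matrix calculations parallelize so well, consider a minimal pure-Python sketch of a matrix product. The function name `matmul` and the sample matrices are illustrative assumptions, not part of any library. The point is that each output cell depends only on one row and one column, so in principle every cell could be computed at the same time.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows (illustrative helper)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    assert all(len(row) == inner for row in a), "inner dimensions must match"
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # Each (i, j) cell is an independent dot product:
            # no cell needs the result of any other cell.
            out[i][j] = sum(a[i][k] * b[k][j] for k in range(inner))
    return out


weights = [[1.0, 2.0], [3.0, 4.0]]
inputs = [[5.0, 6.0], [7.0, 8.0]]
print(matmul(weights, inputs))  # [[19.0, 22.0], [43.0, 50.0]]
```

This loop computes the cells one after another. A GPU can assign many cells to separate threads and compute them concurrently, which is where the advantage on large matrices comes from.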

The Significance of GPUs in Modern AI Applications

Modern AI applications heavily rely on GPUs for their ability to process vast amounts of data quickly. This has led to significant advancements in fields like drug discovery, where GPUs facilitate complex simulations and analyses. For instance, GPU computing has enabled advancements in understanding protein-ligand interactions and drug design, leveraging deep learning techniques to analyze biological data.

Why GPUs are Utilized for Machine Learning

The Need for Speed

The key to GPUs’ effectiveness in AI and ML lies in their parallel processing capabilities. Unlike CPUs, which are designed to handle a few complex operations at a time, GPUs can handle thousands of simpler, concurrent operations. This makes them particularly well-suited for the types of repetitive, data-intensive tasks required in ML, like training and inference on large neural networks.

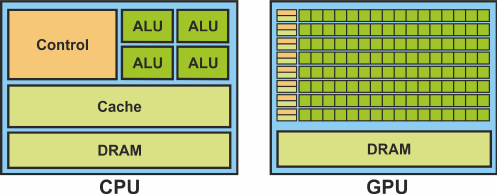

Diagram: Comparing GPU and CPU Architectures

Figure 1: Comparison of CPU versus GPU architecture. Source: https://www.researchgate.net/figure/Comparison-of-CPU-versus-GPU-architecture_fig2_231167191

To better understand the difference between GPU and CPU architectures, consider a CPU as a small group of very smart people who can quickly solve complex problems one at a time. In contrast, a GPU is like a large crowd of less skilled people who can simultaneously work on simpler tasks, making them collectively faster for specific types of computations, such as those required in AI and ML.
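The crowd analogy can be sketched in a few lines of Python. This is a CPU-side illustration only, not GPU code: the helper names (`scale_chunk`, `split`, `parallel_scale`) are made up for this example, and Python threads will not actually make this particular task faster. What it shows is the decomposition: one large job is cut into independent pieces, many workers apply the same simple operation, and the results are stitched back together.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_chunk(chunk, factor):
    """Apply the same simple operation to every element of one chunk."""
    return [x * factor for x in chunk]


def split(data, n_chunks):
    """Split data into at most n_chunks contiguous pieces of similar size."""
    size = max(1, (len(data) + n_chunks - 1) // n_chunks)
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_scale(data, factor, n_workers=4):
    """Hand each chunk to a worker, then join the results in order."""
    chunks = split(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        results = pool.map(lambda c: scale_chunk(c, factor), chunks)
        return [x for chunk in results for x in chunk]


data = list(range(10))
sequential = [x * 2 for x in data]
print(parallel_scale(data, 2) == sequential)  # True
```

A GPU follows the same pattern at a much larger scale, with thousands of hardware threads instead of four software workers.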

GPU vs. CPU in Machine Learning

A benchmarking study of GPUs vs. CPUs in ML involves comparing their performance across various algorithms and datasets. This includes evaluating the speed of training and inference, as well as the accuracy of the models produced. Factors such as the number of cores, memory bandwidth, and power efficiency are considered during this comparison.

The results of these benchmarking studies typically show that GPUs significantly outperform CPUs in ML tasks, especially when training complex models or processing large datasets. Reported speedups over CPU-only systems are often in the range of 10-100x, although the actual figure depends heavily on the model, the batch size, and the specific hardware being compared.
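A benchmark of this kind boils down to timing the same workload on two systems and dividing one time by the other. The sketch below is a minimal timing harness using only the standard library. The names `time_it` and `speedup` are hypothetical helpers, and the two workloads are plain CPU stand-ins of different sizes rather than real CPU and GPU runs.

```python
import time


def time_it(fn, repeats=5):
    """Return the best wall-clock time in seconds over several runs of fn()."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        best = min(best, time.perf_counter() - start)
    return best


def speedup(baseline_seconds, accelerated_seconds):
    """How many times faster the accelerated run is than the baseline."""
    if accelerated_seconds <= 0:
        raise ValueError("accelerated_seconds must be positive")
    return baseline_seconds / accelerated_seconds


# Stand-in workloads: the second one simply does a tenth of the work.
def slow_workload():
    return sum(i * i for i in range(200_000))


def fast_workload():
    return sum(i * i for i in range(20_000))


ratio = speedup(time_it(slow_workload), time_it(fast_workload))
print(f"Measured speedup: {ratio:.1f}x")
```

To compare real hardware, the two stand-ins would be replaced by the same computation placed on each device. When timing GPU code, make sure the work has actually finished before stopping the clock, since GPU operations may be launched asynchronously.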

Market Trends and Popular GPU Options for Machine Learning

The GPU market for AI and ML is dominated by a few key players, with Nvidia being a prominent one. They offer a range of GPUs, such as the A100 and H100 series, which are designed specifically for AI and ML applications.

When comparing GPUs for AI applications, it’s important to consider factors like core count, memory capacity, and compatibility with AI frameworks. For instance, the Nvidia RTX 3090 and A100 are known for their high performance and support for deep learning projects, making them popular choices among data scientists and researchers.

The table below compares the key features and specifications of the NVIDIA A100, GeForce RTX 3090, AMD Radeon Instinct MI200, GTX 1660 Super, and RX 6700 XT:

| Feature/Specification | NVIDIA A100 | GeForce RTX 3090 | AMD Radeon Instinct MI200 | GTX 1660 Super | RX 6700 XT |
| --- | --- | --- | --- | --- | --- |
| Architecture | Ampere | Ampere | CDNA | Turing | RDNA 2 |
| CUDA Cores | 6912 | 10496 | – | 1408 | – |
| Stream Processors | – | – | 7680 | – | 2560 |
| Tensor Cores | 432 | 328 | – | – | – |
| Memory | 40 GB HBM2 | 24 GB GDDR6X | 32 GB HBM2e | 6 GB GDDR6 | 12 GB GDDR6 |
| Memory Bandwidth | 1.6 TB/s | 936 GB/s | 1.23 TB/s | 336 GB/s | 384 GB/s |
| TDP | 400 W | 350 W | 560 W | 125 W | 230 W |
| Intended Use | Data centers, large-scale AI/ML tasks | High-end gaming, AI/ML tasks | Data centers, high-performance computing | Budget-friendly AI/ML tasks, gaming | Gaming, AI/ML tasks |

This table provides a snapshot of the capabilities and intended uses of these GPUs, offering insights into their suitability for different AI and ML applications.
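Memory capacity is often the first constraint to check. As a rough sketch, the snippet below estimates how much memory a model's weights need and lists which GPUs from the table could hold them. The capacities are copied from the table; the helper names and the 4-bytes-per-parameter default (32-bit floats) are assumptions for illustration. The estimate covers weights only, so real training needs noticeably more room for activations, gradients, and optimizer state.

```python
# Memory capacities in GB, taken from the comparison table.
GPU_MEMORY_GB = {
    "NVIDIA A100": 40,
    "GeForce RTX 3090": 24,
    "AMD Radeon Instinct MI200": 32,
    "GTX 1660 Super": 6,
    "RX 6700 XT": 12,
}


def weights_gb(n_params, bytes_per_param=4):
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


def gpus_that_fit(n_params, bytes_per_param=4):
    """Names of GPUs whose memory is at least the size of the weights."""
    needed = weights_gb(n_params, bytes_per_param)
    return [name for name, cap in GPU_MEMORY_GB.items() if needed <= cap]


print(weights_gb(7_000_000_000))     # 28.0
print(gpus_that_fit(7_000_000_000))  # ['NVIDIA A100', 'AMD Radeon Instinct MI200']
```

Passing `bytes_per_param=2` models 16-bit weights, which halves the estimate and lets the same model fit on smaller cards.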

Practical Applications of GPUs in Machine Learning Projects

Sample Machine Learning Code Using a GPU

Below is a Python code snippet that demonstrates how to use a GPU for a simple machine-learning task. This example utilizes TensorFlow, a popular deep learning framework, to train a neural network on the MNIST dataset, which comprises images of handwritten digits.

Python

    import tensorflow as tf

    # Report how many GPUs TensorFlow can see (it uses one automatically if present)
    print("Num GPUs Available: ", len(tf.config.list_physical_devices('GPU')))

    # Define a simple neural network model
    model = tf.keras.models.Sequential([
        tf.keras.layers.Flatten(input_shape=(28, 28)),   # 28x28 image -> 784 values
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dropout(0.2),                    # randomly drop 20% of units while training
        tf.keras.layers.Dense(10, activation='softmax')  # one output per digit class
    ])

    model.compile(optimizer='adam',
                  loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])

    # Load the MNIST dataset and scale pixel values to the range [0, 1]
    mnist = tf.keras.datasets.mnist
    (train_images, train_labels), (test_images, test_labels) = mnist.load_data()
    train_images, test_images = train_images / 255.0, test_images / 255.0

    # Train the model (runs on the GPU when one is available)
    model.fit(train_images, train_labels, epochs=5)

    # Evaluate the model on the held-out test set
    model.evaluate(test_images, test_labels, verbose=2)

Code Explanation and GPU’s Role

This code demonstrates training a simple neural network for classifying images from the MNIST dataset. TensorFlow places operations on a GPU automatically when one is visible (the first `print` reports how many it found) and falls back to the CPU otherwise. The neural network includes two dense layers and a dropout layer to reduce overfitting. Training (`model.fit`) and evaluation (`model.evaluate`) are the steps that benefit from the GPU. For a model this small the speedup is modest; the gains grow as models and datasets get larger.
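Automatic placement is usually enough, but it can help to see the choice made explicitly. The sketch below checks for a GPU and pins a matrix multiplication to a chosen device with `tf.device`. The helper `pick_device` is a hypothetical name introduced here, and the `try`/`except` around the import is only there so the snippet also runs on a machine without TensorFlow installed.

```python
try:
    import tensorflow as tf
except ImportError:  # allows the sketch to run where TensorFlow is missing
    tf = None


def pick_device(num_gpus):
    """Return a TensorFlow device string: the first GPU if any, else the CPU."""
    return "/GPU:0" if num_gpus > 0 else "/CPU:0"


if tf is not None:
    gpus = tf.config.list_physical_devices("GPU")
    device = pick_device(len(gpus))

    # Everything created inside this block is placed on the chosen device.
    with tf.device(device):
        a = tf.random.normal((512, 512))
        b = tf.random.normal((512, 512))
        c = tf.matmul(a, b)

    print("Requested device:", device)
    print("Result lives on:", c.device)
else:
    print("TensorFlow is not installed; would use", pick_device(0))
```

Pinning work to `/CPU:0` on purpose is also a simple way to compare CPU and GPU timings for the same operation.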

Mathematical Equation Example in Deep Learning

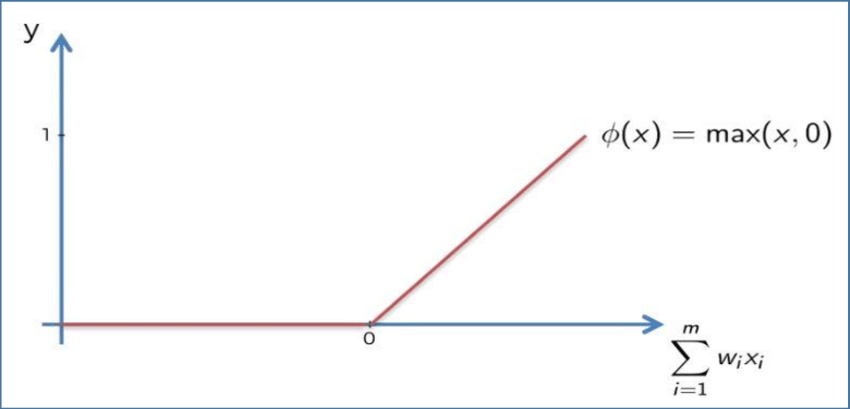

In deep learning, an essential mathematical concept is the activation function. A common activation function is the Rectified Linear Unit (ReLU), defined as:

ReLU(x)=max(0,x)

This function returns x if x is greater than zero; otherwise, it returns zero. It introduces non-linearity in the neural network, allowing the network to learn complex patterns.

Figure 2: Rectified Linear Unit (ReLU) Activation Function. Source: https://www.researchgate.net/figure/Rectified-Linear-Unit-ReLU-Activation-Function_fig1_337485695
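The definition translates directly into code. The following is a minimal pure-Python sketch (the function names `relu` and `relu_all` are chosen here for illustration). The last lines show what "non-linear" means in practice: applying ReLU to a sum is not always the same as summing the ReLU of each part.

```python
def relu(x):
    """Rectified Linear Unit: x if x is positive, otherwise 0."""
    return max(0, x)


def relu_all(values):
    """Apply ReLU to every element of a list."""
    return [relu(v) for v in values]


print(relu_all([-2, -0.5, 0, 1.5, 3]))  # [0, 0, 0, 1.5, 3]

# Non-linearity: for a linear function f, f(a + b) always equals f(a) + f(b).
a, b = -1, 2
print(relu(a + b))        # 1
print(relu(a) + relu(b))  # 2
```

In a framework such as TensorFlow, the same operation is applied to whole tensors at once, and on a GPU each element is handled by its own thread.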

Addressing Common Questions

One common concern is the cost and accessibility of GPUs for beginners. High-performance GPUs can be expensive, but there are budget-friendly options like the GTX 1660 Super or cloud-based GPU services that offer a more affordable entry point into GPU-accelerated computing.

Another query often raised is about future trends and sustainability. The GPU technology landscape is continuously evolving, with a focus on improving energy efficiency and reducing the carbon footprint. Future GPUs are expected to be more power-efficient while delivering higher computational power.

The Continuing Evolution of GPUs in AI

The future of GPUs in AI is promising, with continuous advancements in hardware capabilities and efficiency. We can expect to see GPUs with even greater parallel processing capabilities, lower power consumption, and more optimized architectures for AI-specific tasks.

GPU-accelerated AI is also expanding into new areas such as edge AI, where models run directly on small devices, and quantum computing research, where GPUs are used to simulate quantum circuits. These developments are likely to open up new frontiers in AI research and applications, driving innovation across various sectors.

Conclusion: Embracing the GPU Revolution in AI

In summary, GPUs have played a transformative role in the advancement of AI and machine learning. From accelerating complex computations to enabling real-time processing and large-scale AI training, GPUs have become an indispensable tool in the AI toolkit. We encourage readers, especially those new to AI, to explore the possibilities offered by GPU-enhanced AI. Whether it’s through experimenting with different GPU models, diving into AI programming, or staying informed about the latest trends in GPU technology, there’s a wealth of opportunities to engage with this exciting field.
